This section describes interfacing MonAMI with Ganglia. To try the configuration within this section you will need to have Ganglia available. Setting up Ganglia is easy, but is outside the remit of this tutorial. Further details, including Ganglia tutorials and binary packages, are available from the Ganglia project site.

This section starts with simple filesystem monitoring and builds into a demonstration of full Torque and Maui monitoring. This example can be adapted to monitor other systems that MonAMI supports, such as DPM, MySQL, Tomcat...

Configuration file

As before, copy the following configuration file as /etc/monami.d/example.conf.

    ##
    ##  MonAMI by Example, Section 6
    ##

    # Our root filesystem
    [filesystem]
    name = root-fs
    location = /
    cache = 2 ❶

    # Our /home filesystem
    [filesystem]
    name = home-fs
    location = /home
    cache = 2 ❶

    # Once a minute, record / and /home available space.
    [sample]
    read = root-fs.capacity.available, \
           home-fs.capacity.available
    write = ganglia
    interval = 1m ❷

    # Ganglia target that accepts data
    [ganglia] ❸

Some points to note:

❶ The cache attributes enforce a “never more than” policy: never more than once every two seconds.

❷ MonAMI controls how often data in Ganglia is updated: updating data once a minute is a common choice.

❸ The ganglia plugin will attempt to read from the

Running the example

It is recommended (although not essential) that the Ganglia monitoring daemon gmond is running on the machine. The gmond daemon monitors many low-level facilities and also provides a common place for Ganglia configuration: the file gmond.conf. If gmond.conf is not in a standard location, you can specify where to find it using the config attribute. If gmond isn't installed, you can specify how to send Ganglia metric-update messages using other attributes within the ganglia target. Refer to the MonAMI User Guide or the monami.conf(5) manual page for further details.

When run, MonAMI will emit the corresponding UDP multicast packets containing the metric information. The various gmond daemons that are listening will pick up the metrics and, when queried by the gmetad daemon, will present the latest information.

The gmetad daemon polls the gmond daemons periodically. By default the polling interval is 15 seconds, although it can be altered through the gmetad.conf configuration file. This means that, under the default Ganglia configuration, the new metrics defined in the above example may take up to 75 seconds to become visible in the web front-end: up to 60 seconds for MonAMI's sample interval plus up to 15 seconds for the next gmetad poll.
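One reading of the 75-second figure is that it is the sum of the sample interval in the example (one minute) and gmetad's default polling interval (15 seconds). The following Python fragment is a minimal sketch of that arithmetic; the function name is illustrative, and the calculation ignores network and page-rendering time.

```python
def worst_case_delay(sample_interval_s: int, gmetad_poll_s: int = 15) -> int:
    """Upper bound, in seconds, before a new value is visible to gmetad.

    Assumes the worst case: the value changes just after MonAMI has
    taken a sample, and the next update reaches gmond just after
    gmetad has polled it.
    """
    return sample_interval_s + gmetad_poll_s


# interval = 1m in the example configuration, default gmetad polling
print(worst_case_delay(60))
```

Lengthening the sample interval or the gmetad polling interval raises this bound by the same amount.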

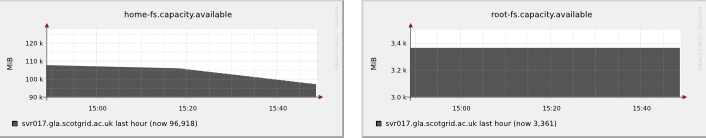

Sample results

To view the results, look at the Ganglia page (the Host-specific view) for the server that is running MonAMI. You should see two additional graphs towards the bottom of the page, entitled “root-fs.capacity.available” and “home-fs.capacity.available”. Here is an example:

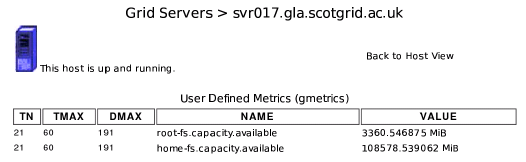

Ganglia also provides a list of extra metrics on the Gmetrics web-page. This page is linked to from the Host view: follow the “Gmetrics” link on the left side. On the Gmetrics page, you should see two entries underneath the “User Defined Metrics (gmetrics)” title.

Each metric reported to Ganglia is named after its path within the datatree. MonAMI keeps track of the units for each metric. This information is passed on to Ganglia, allowing it to display the current value with the appropriate units.

Dealing with old data

The figure above shows a table where the first three columns are TN, TMAX and DMAX. The TN value is the number of seconds since the metric was last updated. If the webpage is reloaded, TN will increase until a new metric value is received by Ganglia.

TMAX indicates the freshness of a metric. If TN exceeds TMAX, then Ganglia is expecting a new value. However, TMAX is only advisory: Ganglia takes no action when TN exceeds TMAX.

Delays in getting new data

Sometimes TN will exceed TMAX. Bear in mind that the PHP web-page queries gmetad to obtain information. In turn, gmetad will poll one or more gmond instances periodically (by default, every 15 seconds). This may introduce a delay between new metric values being sent and becoming visible within the web-pages.

DMAX indicates how long an old metric should be retained. If TN exceeds DMAX then Ganglia will consider that the metric is no longer being monitored and will discard information about it. Historic data (the RRD files from which the graphs are drawn) will be kept, but the corresponding graphs will no longer be displayed. If fresh metric values become available, then Ganglia will start redisplaying the metric's graphs, and the historic data may contain a gap.

Choosing a value for DMAX is a compromise. Too short an interval risks metrics being dropped accidentally if a data-source takes an unusually long time to provide information, whilst too long an interval results in unnecessary delay between a metric no longer being monitored and Ganglia dropping that metric.

Automatic dropping of old metrics can be disabled by setting DMAX to zero. If this is done, then there is no risk of Ganglia mistakenly dropping a metric. However, if a metric receives no further updates, Ganglia will continue to plot the last value indefinitely (or until the gmond and gmetad daemons are restarted, in that order). Unless the daemons are restarted, false data will be displayed, providing a potential source of confusion.
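The roles of TN, TMAX and DMAX described above can be summarised in a few lines of Python. This is a minimal sketch of the rules as stated in this section, not Ganglia's actual code; the function name and the state labels are illustrative.

```python
def metric_state(tn: int, tmax: int, dmax: int) -> str:
    """Classify a metric from its TN, TMAX and DMAX values (in seconds)."""
    # A DMAX of zero disables automatic dropping, so only a positive
    # DMAX can cause the metric to be discarded.
    if dmax > 0 and tn > dmax:
        return "dropped"
    # TMAX is advisory: a new value is expected, but nothing happens.
    if tn > tmax:
        return "overdue"
    return "fresh"


print(metric_state(tn=10, tmax=60, dmax=120))
print(metric_state(tn=70, tmax=60, dmax=120))
print(metric_state(tn=130, tmax=60, dmax=120))
print(metric_state(tn=10000, tmax=60, dmax=0))
```

The last call illustrates the drawback of a zero DMAX: the metric is never dropped, however long ago it was last updated.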

Calculating DMAX

MonAMI will calculate an estimate for DMAX based on observed behaviour of the monitoring targets. A fresh estimate is calculated for each metric update. If the monitoring environment changes, MonAMI will adjust the corresponding metrics' DMAX value, allowing Ganglia to adapt to changes in the underlying monitoring system's behaviour.

Preventing metric-update loss

An issue with providing monitoring information for Ganglia is how to deal with large numbers of metrics. MonAMI can provide very detailed information, resulting in a large number of metrics. This can be a problem for Ganglia.

The current Ganglia architecture requires each metric update to be sent as an individual metric-update message. On a moderate-to-heavily loaded machine, there is a chance that gmond may not be scheduled to run as the messages arrive. If this happens, the incoming messages will be placed within the network buffer. Once the buffer is full, any subsequent metric-update messages will be lost. This places a limit on how many metric-update messages can be sent in one go. For 2.4-series Linux kernels the limit is around 220 metric-update messages; for 2.6-series kernels, the limit is around 400.

Trying not to cause problems

The ganglia plugin tries to minimise the risk of loss by sending metric-update messages in bursts of 200 (fewer than the limit of around 220 on 2.4-series kernels), with a short pause between successive bursts. The pause gives the gmond daemons time to react. The attributes that fine-tune this behaviour are discussed in the User Guide.
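The burst-and-pause idea can be illustrated with a short Python sketch. This is not the ganglia plugin's implementation: the function name, the send callback and the 0.1-second pause are assumptions made for illustration; only the burst size of 200 comes from the text above.

```python
import time
from typing import Callable, Iterable


def send_in_bursts(
    updates: Iterable[str],
    send: Callable[[str], None],
    burst_size: int = 200,   # burst size quoted in this section
    pause_s: float = 0.1,    # hypothetical value for the "short pause"
) -> int:
    """Send updates in bursts with a pause between bursts.

    Returns the number of bursts sent.
    """
    pending = list(updates)
    bursts = 0
    for start in range(0, len(pending), burst_size):
        if start > 0:
            # Give the receiver time to drain its network buffer.
            time.sleep(pause_s)
        for update in pending[start:start + burst_size]:
            send(update)
        bursts += 1
    return bursts


sent = []
count = send_in_bursts((f"metric-{i}" for i in range(450)), sent.append)
print(count, len(sent))
```

With 450 updates and a burst size of 200, the updates go out as two full bursts and one partial burst.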

One simple solution is to split the set of metrics into subsets. If these subsets are updated independently and none has sufficient metrics to overflow the network buffer, then there will be no metric-update message loss. If more than one system is to be monitored, this splitting is easily and naturally achieved.
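A quick way to check a proposed split is to compare the number of metrics in each subset against the approximate per-kernel limit. The Python fragment below is a minimal sketch of such a check; the function name and the metric counts are hypothetical, and the default limit of 220 is the approximate 2.4-series figure quoted earlier.

```python
from typing import Dict, List


def oversized_subsets(subsets: Dict[str, int], limit: int = 220) -> List[str]:
    """Return the names of subsets with more metrics than the limit."""
    return sorted(name for name, count in subsets.items() if count > limit)


# Hypothetical metric counts for two independently sampled subsets.
counts = {"torque": 150, "maui": 300}
print(oversized_subsets(counts))             # 2.4-series limit (about 220)
print(oversized_subsets(counts, limit=400))  # 2.6-series limit (about 400)
```

Any subset reported by the check is a candidate for splitting further, or for excluding branches of the datatree that are not needed.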

The following example shows a configuration for monitoring a local Torque and Maui installation. The configuration demonstrates how two sample stanzas can isolate the monitoring work. This spreads the monitoring load and reduces the impact on Ganglia.

    ##
    ##  MonAMI by Example, Section 6.
    ##  Torque and Maui monitoring
    ##

    [torque]
    cache = 60 ❶

    [maui]
    user = root ❷
    cache = 60 ❶

    [sample] ❸
    read = torque.Jobs, torque.Queues.Execution
    write = ganglia
    interval = 1m

    [sample] ❸
    read = maui, !maui.Fairshare.User
    write = ganglia
    interval = 1m

    [ganglia] ❹

Some points:

❶ Make sure we never query the Torque or Maui services more than once a minute.

❷ The user attribute specifies which user the MonAMI maui plugin should claim to be running as; the value

❸ The Torque and Maui monitoring are done independently.

❹ We assume that Ganglia

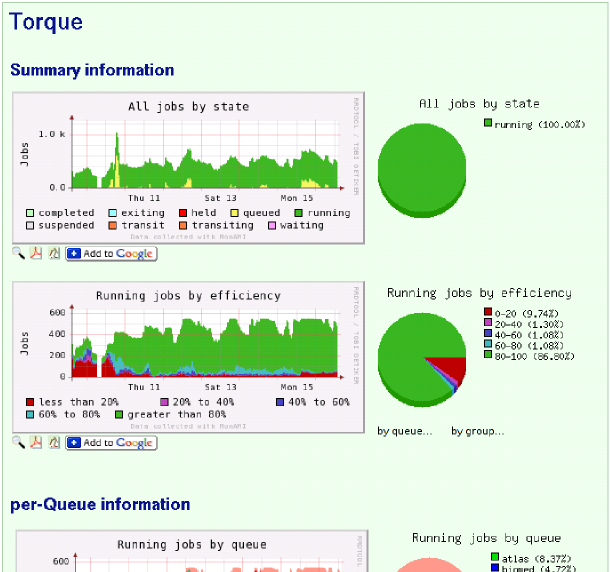

The following shows the Gmetrics page, with a subset of the available metrics:

Producing complex graphs

MonAMI can produce a large number of metrics. The standard Ganglia web front-end shows a single graph (towards the bottom of the page) for each metric. Although these single-metric graphs are functional, they help little towards understanding the “bigger picture” if a large number of metrics are being recorded.

Ganglia's web front-end contains a number of graphs that aggregate metrics provided by the gmond daemon. One such graph shows the number of runnable processes, the 1-minute load average and the number of CPUs. Another shows the total in-core memory of the machine, split by usage. These graphs provide a good overview of the computer's current behaviour and greatly assist in diagnosing problems as they arise.

Unfortunately, these default aggregation graphs are hard-coded into the PHP. There is currently no standard way to extend the Ganglia web front-end to include custom graphs. There is also no method to specify that certain graphs should be displayed for only one particular machine: the one that is running MonAMI collecting the interesting metrics.

The “external” package

MonAMI provides monitoring information for any number of monitoring systems. Strictly speaking, its job is done once data is within those systems. However, to get the most out of any particular system, you may need to tweak the monitoring system, or expand some scripts to better match the breadth of data MonAMI provides.

The external package contains a number of application-specific instructions, sample configuration files and modules. It is both a reference point for using MonAMI with particular monitoring systems and a platform with which to explore what is possible.

The section of the “external” package for Ganglia includes a PHP framework called multiple-graphs. This includes support for frames (in which multiple graphs and tables can be included) and pop-ups (allowing the display of context-specific information). The package also includes support for host-specific graphs.

The following figure shows the multiple-graphs framework in action: some of the Torque monitoring data is shown as graphs and pie-charts.

The data is provided by MonAMI running with a configuration similar to the above example. The graphs and pie-charts are generated using the multiple-graphs library. The exact PHP configuration for generating the Torque and Maui frame is also available within the “external” package as one of the examples. The examples are documented and include installation instructions.