Table of Contents

- 1. Introduction

- 2. Running MonAMI

- 3. Configuring MonAMI

- 4. Security

- 5. Further Information

List of Figures

- 1.1. Illustration of MonAMI architecture

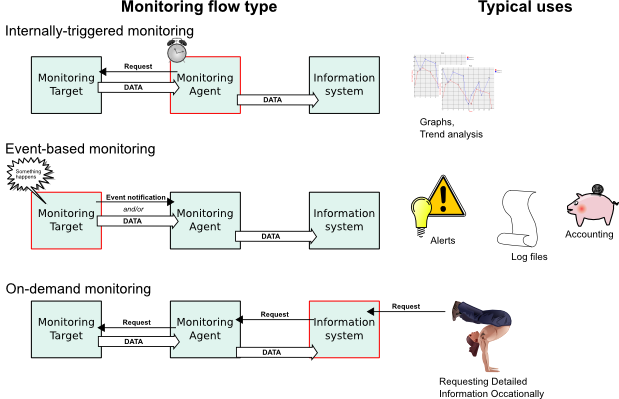

- 1.2. Illustration of the three data flows

- 3.1. Data from DPM displayed within Ganglia.

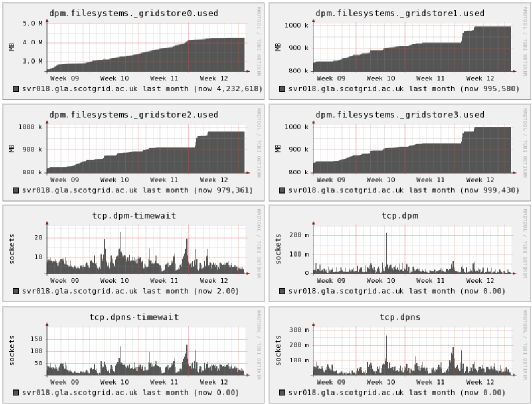

- 3.2. Ganglia graphs showing data from dpm and tcp targets

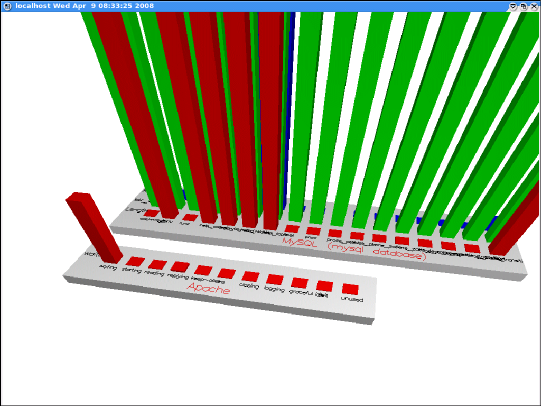

- 3.3. gr_Monitor showing data from apache and mysql targets

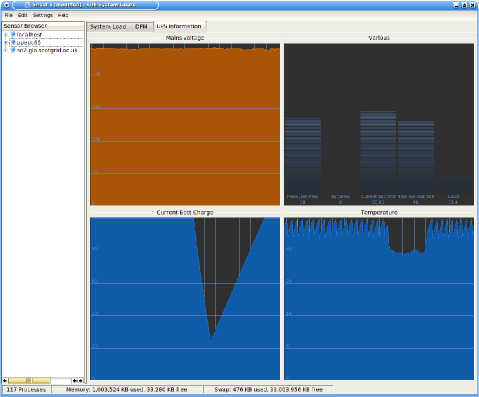

- 3.4. KSysGuard showing data from the nut plugin

- 3.5. Example deployment with key elements of MonALISA shown.

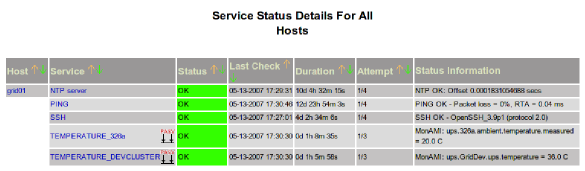

- 3.6. Nagios service status page showing two MonAMI-provided outputs.

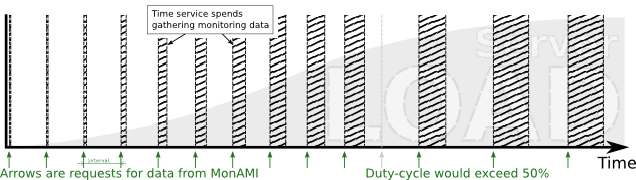

- 3.7. Adaptive monitoring increasing sampling interval in response to excessive server load.

This document describes how to configure and run MonAMI: a universal sensor infrastructure. Following the Unix philosophy, it aims to do a simple job well. That job is to move monitoring information from a service into a monitoring system. It does not attempt to store monitoring information or display (graphically) the data, as other systems exist that already do this. Rather, it aims to interface well with existing software.

To understand how MonAMI may be configured, a brief introduction to the underlying ideas of MonAMI must be given. This introduction chapter will give an overview of how MonAMI allows monitoring information to flow. This is important as later chapters (which describe specific aspects of MonAMI) may be confusing without a clear understanding of the “big picture.”

It is worth stressing at this stage that monitoring is a more involved process than merely periodically collecting data. Without a clear understanding of this, MonAMI may appear superfluous!

In essence, MonAMI allows the collection of information from one or more services. This information is then sent off, perhaps to some data storage or to be displayed within some monitoring software. This gathering of information can be triggered by MonAMI internally or from an external agent, depending on how MonAMI is configured.

MonAMI has two parts: a core infrastructure and a set of plugins. Plugins do the more immediately useful activity, such as collecting information and sending the information somewhere. There are broadly two classes of plugins: monitoring plugins and reporting plugins.

Monitoring plugins can collect information from a specific source; for example, the MySQL plugin (described in Section 3.4.8, “MySQL”) can collect the current performance of a MySQL database. A configured monitoring plugin will act as a source of monitoring information.

Reporting plugins store gathered information or send it to some monitoring system. For example, the filelog plugin (described in Section 3.5.1, “filelog”) will store information as a single line within a log file, each line starting with the appropriate date/time stamp information. Another example is the Ganglia plugin (see Section 3.5.3, “Ganglia”), which sends network packets containing the information so that an existing Ganglia monitoring system can display the information. A configured reporting plugin will act as a sink of information.

A target is a configured instance of a plugin, one that is monitoring something specific or sending information to a specific information system. MonAMI can be configured so it has many MySQL targets, each monitoring target monitoring a different MySQL database server. Another example is when the filelog plugin is used to log data to different files. Although there is only ever one filelog plugin, there are many filelog targets, one per file.

MonAMI-core provides the infrastructure that allows gathered information (provided by monitoring plugins) to be sent to reporting plugins (which send the information off to where it is needed). MonAMI-core also handles internal bookkeeping and the functionality common between plugins, such as reading configuration files and caching results.

Several useful plugins (both monitoring and reporting) are

included with the default distribution. However, MonAMI aims to

be extensible. Writing a new monitoring plugin allows data to

be sent to any of the existing reporting plugins; writing a new

reporting plugin allows any of the MonAMI data to be sent to a

new storage or monitoring system. Instructions on how to write

new plugins are given in the developers guide (the file

README.developers).

A monitoring agent (such as MonAMI) is charged with the job of marshalling information from one or more systems (usually local to the agent) to some other system (often remote). Whether we are monitoring a database for performance problems, keeping a watchful eye on missing web pages or plotting a graph to see how many users are logged in over time, all monitoring activity can be understood as consisting of three abstract components: the monitoring target, the monitoring agent and the information system. In this context, the monitoring agent is MonAMI. The monitoring target might be a database, webserver or the operating system's current-user information. The information system might be a log file, web page or some distributed monitoring system, such as Ganglia (Section 3.5.3, “Ganglia”) or Nagios (Section 3.5.9, “Nagios”).

Unlike mechanical monitoring systems (see, for example, the Watt governor), computers work in discrete units of time. In a multitasking operating system any monitoring activity must be triggered by something outside the monitoring system. From the three components, we can describe three monitoring flows based on which component triggered the monitoring activity. If the information system triggered the monitoring activity, the monitoring is on-demand; monitoring that is triggered within the agent (i.e. triggered internally within MonAMI) is internally-triggered; if the service triggered the monitoring, due to some service-related activity, the monitoring is event-based.

Internally-triggered monitoring is perhaps the most common. An example of internally-triggered monitoring is periodically asking for (and recording somewhere) the current status of some service. We might ask an Apache web-server the current activity of its threads; we might ask a MySQL database how many times its query-cache has been useful. These questions can be asked at any time. Typically the values are queried at regular intervals and the results are plotted on a graph.

With on-demand monitoring, some external agent asks for data. An example of on-demand monitoring would be a web-page that returned the current status of a database: the information could be gathered only when queried by a user. The external agent can request information at any time, although in practice, requests are often periodic. A major benefit of on-demand monitoring flows is that it allows the monitoring requirements to vary dynamically as greater or lesser volume of information is requested. A potential problem with on-demand monitoring is with requests overloading the monitoring system. MonAMI provides some protection against this by allowing the enforcement of a caching policy (see Section 3.3.2, “The cache attribute”).

The third monitoring flow is event-based monitoring. Events are triggered by the monitoring target (e.g., the Apache server). The target (such as an Apache server) sends information voluntarily at an unpredictable time, usually due to something happening externally. Event monitoring flow is most often used to record that “something” has happened; for example that a web download has completed.

A plugin that supports event-based monitoring flow makes the

events available in different channels.

One can subscribe to one or more channels. Information from

that channel is then sent to one or more reporting targets. For

example, the Apache web-server monitoring plugin (see Section 3.4.2, “Apache”) can produce an event for each attempted

HTTP transfer (whether successful or not) as the

access channel, so subscribing to the

apache target's access channel would

provide information on all transfers. A subscription can be

more more specific: the channel access.4xx

provides information on only those transfers resulting in an

error status-code, and subscribing to

access.4xx.404 will report on only missing

page (status-code 404) events.

Explicit examples of each of the three event flows are given in

Section 3.8, “Example configurations”. Although the examples rely on an

understanding of the monami.conf format

(which Chapter 3, Configuring MonAMI documents in detail), the

examples (along with the accompanying notes) should be fairly

obvious.

When monitoring something (a service, for example) it is rare that the current status is described by a single metric. Although you might only want a tiny subset of the available information, the current status is usually described by a vast slew of data. We want a convenient concept that allows the data to be grouped together, allowing easy selection of the interesting subsets.

A datatree is a collection of related information. As the name suggests, the metrics are held in a tree structure, analogous to a filesystem. A datatree has branches (like “directories” or “folders”) each of which contains measurements (like files) and further branches. In general, branches are generic concepts and the data underneath the branches are measurements of the generic concept.

A typical datatree is represented below. Here, the

Threads branch contains data related to the

generic concept of threads, each of which might be undertaking

one of several different activites. The data underneath the

Threads branch (waiting,

starting, etc.) are the number of threads in

the respective state (“waiting for a connection”,

“starting up”, etc..)

Apache

|

+--Workers

| |

| +--busy: 1

| |

| +--idle: 49

|

+--Threads

|

+--waiting: 49

|

+--starting: 0

|

+--reading: 0

|

+--replying: 1

|

+--keep-alive: 0

|

+--dns: 0

|

+--closing: 0

|

+--logging: 0

|

+--graceful exit: 0

|

+--idle: 0

|

+--unused: 0

Each item of data is usually referred to by its full path

separated by periods (.), excluding the root

node. For example, the number of Apache threads currently

replying with requested information is

Threads.replying. In the above example,

Threads.replying has a value of

1.

Each metric has multiple elements of metadata. They all have a

name (e.g., Threads.replying), a value

(1 for Threads.replying

in above example), a type (integer, floating-point number,

string, etc...), a string describing in what units the

measurement was taken (with numerical data and where

appropriate) and some additional binary metadata such as

whether the information is static, a counter or reflects

current status.

Datatrees can be combined to form larger datatrees; or subtrees can be selected, limiting the information delivered. Details of how to do this are given in Section 3.6.1, “The read attribute”.

Table of Contents

In this section, the various modes of running MonAMI are discussed. In most production environments, MonAMI runs as a single detached process (a daemon), launched from the system start-up scripts (the init scripts), as described in Section 2.3, “Running in production environment”. Other modes of running monamid, such as testing a new configuration, are also discussed.

The MonAMI application (monamid)

accepts only a limited number of options as most of the

behaviour is controlled by the configuration file

(/home/paul/MonAMI-test-install/etc/monami.conf). The format of

this configuration file is described in a later section of this

guide (Chapter 3, Configuring MonAMI).

The following options are available for the monamid application.

monamid [-f] [-h] [-v] [-V] [--pid-file file]

-for--no-daemonrun in the foreground, i.e. do not detach from current terminal. Unless explicitly configured in

monami.conf, logging output will be sent tostdoutorstderr.-hor--helpdisplay a brief synopsis of available options.

-vor--verboseshow more of the logging information. MonAMI aims to be a quiet application. By default it will only report problems that are from extern resources or that are due to configuration that is inconsistent. With the

-voption specified extra information is reported that, whilst not necessarily reporting an error, is indicative of potentially abnormal activity. This is often useful when MonAMI is not behaving as expected.This option can be repeated to include extra debugging information; information useful when tracking down programming problems within MonAMI.

-Vor--versiondisplay the version of MonAMI and exit.

--pid-filefilestore the PID of monamid in

file, creatingfileif it does not already exist.

Without the -f option, the MonAMI application

(monamid) will assume it is running

in a production environment and will detach itself from the

terminal. The init scripts for

starting MonAMI also make this assumption, and run

monamid without the

-f option.

When first using MonAMI, or when investigating a new

configuration, it is often easier to understand any problems if

the application does not detach from the terminal and continues

to display output to the terminal. When experimenting, it is

recommended to run MonAMI with the -f

(foreground) and -v (verbose) command-line

options. As with other command-line options these can be

combined, so to test-run MonAMI, one can use the following:

/usr/bin/monamid -fv.

In normal operation, MonAMI will detach itself and run

independently as a background task. Typically, one would want

to run MonAMI automatically when a computer starts up. The de

facto method of achieving this is with

init scripts. MonAMI includes a

suitable script, which is stored in the

/home/paul/MonAMI-test-install/etc/init.d directory.

When installing MonAMI (either with the RPM package or manually

with "make install") a suitable "init script" will be installed

in the /home/paul/MonAMI-test-install/etc/init.d directory. Once this is

done, a further two steps are needed: to register the new init

script with the system and "switch on" MonAMI. On RedHat-like

machines, this is achieved with the following two commands:

chkconfig monami on

To manually start or stop MonAMI, one can use the init scripts,

with either the start or stop

option. You can either execute the script directly:

/etc/init.d/monami start

or using the "service" command.

service monami start

The complete list of arguments the init script accepts is:

startUnconditionally attempt to start monamid. If monamid is already running, this attempt will fail.

stopUnconditionally stops monamid. If the application is not already running, then this will (obviously) fail.

reloadSignals MonAMI to reload its configuration. This will only happen if monamid is running: if the application is not running, this will fail. The reload is achieved without stopping and starting monamid.

restartUnconditionally stop and start MonAMI. If monamid was not running, an error is reported and the application is started.

condrestartIf MonAMI is running, then stop monamid and restart it. If the application is not running, then no action is taken.

Finally, as an aid to development work, one can run MonAMI from within the CVS tree.

With the configuration, if MonAMI fails to find the configuration

file in the configured location

(/home/paul/MonAMI-test-install/etc/monami.conf), it will look for

monami.conf within the current working

directory.

For plugins, MonAMI will first look in the configured plugin

directory (/home/paul/MonAMI-test-install/lib/monami). If this directory

does not exist, or contains no plugins, then the

plugin directory within the current directory

is examined. The src/plugin directory is

where plugins are placed as they are built.

MonAMI will run within CVS provided that the "current working

directory" is src and the CVS-configured

MonAMI does not share the same prefix as an installed MonAMI

instance. It is recommended not to run an installed MonAMI on a

development machine and to use the -f

command-line option when running

monamid from the CVS directory tree.

Table of Contents

MonAMI looks for the configuration file

monami.conf. It will first look for this

file in the /home/paul/MonAMI-test-install/etc

directory. If monami.conf is not found

there, the program will check the current directory. If the

configuration file still cannot be found, MonAMI will exit with

error code 1.

The configuration file can describe four things:

configuration for MonAMI, independent of specific monitoring,

which services need monitoring (the monitoring targets) and how to get that information,

where information should be sent (the reporting targets),

how data should flow from the monitoring targets to the reporting target.

As will be discussed later, it is possible to split parts of MonAMI configuration into different files. This allows a set of monitoring definitions to be stated independently of other monitoring activity, which may prove useful when MonAMI is satisfying multiple groups requiring monitoring of services.

Comments can be included by starting a line with the hash

(#) symbol. White space (consisting of space or

tab characters) before the hash symbol is allowed in comment

lines.

Each configuration file is split into multiple stanzas (or sections). Each stanza has a section title line followed by zero or more attribute lines.

A section title is a line containing a word in square brackets

("[mysql]" for example). The case used for the

section title does not matter: "[MySQL]",

"[mysql]" and "[mySQL]" can be used

interchangeably.

All lines following a section title line until the next section

title line (or the end of the file) must be either a blank line,

a comment line or an attribute line. Attribute lines are

keyword-value pairs separated by an equals symbol

("="), for example:

name = myMonitor

White space at the start of the line, either side of the equals symbol and at the end of the line is ignored. Other white space, if significant, is preserved.

If a line ends with a back-slash symbol ("\") then

that line and the one following it are combined into a single

line. This can be repeated, allowing a single very long line to

be broken into several shorter (and more manageable) lines; each

of the shorter lines, except the last one, must end with a

back-slash symbol.

Example configuration files are include in Section 3.8, “Example configurations”.

The following sections describe the different sections that may appear in a configuration file, along with the valid assignment lines that further refine MonAMI behaviour.

One one stanza entitled "monami" is allowed:

subsequent monami stanzas will be silently ignored.

The MonAMI section describes how MonAMI-core should run.

MonAMI provides messages containing information about events

that occur during runtime. The destination of these messages

is controlled by a set of configuration parameters that all

begin with "log".

Each message has a severity; the four severity levels are:

- critical

no further execution is possible, MonAMI will stop immediately.

- error

something went wrong. It is possible to continue running but with (potentially) reduced functionality. Errors might be rectified by altering MonAMI configuration.

- info

a message that, whilst not indicating that there was an error, is part of a limited commentary that might be useful in deciphering apparently bizarre behaviour.

- debug

a message that is useful in determining why some internal inconsistency has arisen. The information provided is tediously verbose and only likely of use when finding problems within the MonAMI program and plugins.

The destination of messages (and whether certain messages

are ignored) can be configured on the command line, or

within the [monami] section of the

configuration file.

Normally, a user is only interested in "critical" and

"error" messages. If MonAMI is not working correctly, then

examining the messages with "info" severity might provide a

clue. Supplying the -v command-line option

tells MonAMI to return info messages.

If MonAMI is running as a normal process (using the

-f option), then critical and error

messages are sent to standard error (stderr) and other message

severity levels are ignored. If MonAMI is running verbosely

(using the -v option) then info messages

are sent to standard output (stdout), if running more

verbosely (with -vv) then the debug

messages are also sent to stdout.

If MonAMI is running as a daemon (i.e. without the

-f command-line option) then, by default,

critical and error messages are sent to syslog (using the

"daemon" facility), info is ignored (unless running with the

verbose option: -v) and debug is ignored

(unless running more verbosely: -vv). Any

messages generated before MonAMI has detached itself are

either sent to stdout, stderr or ignored.

Other destinations are defined as follows:

- An absolute file location (i.e. beginning with

"

/") This is treated as a file destination. The message is appended to the file, creating the file if necessary.

- syslog

indicates the message should be sent to syslog daemon facility.

- ignore

indicates the message should be ignored.

- stderr

sends the message to standard-error output.

- stdout

sends the message to standard output.

Some examples:

[monami] # ignore all but critical errors log = ignore log_critical = syslog

[monami] # store critical and error messages in separate files log = ignore log_critical = /var/log/monami/critical.log log_error = /var/log/monami/error.log

MonAMI needs no special privileges to run. In common with other applications, it is possible that some bug in MonAMI be exploitable and allow a local (or worse, remote) user to compromise the local system. To reduce the impact of this, it is common for an application to “drop” their elevated privileges (if running with any) soon after they start.

There are two options within the configuration file to control this: user and group. The user option tells MonAMI to switch its user-ID to that of the supplied user and to switch group-ID to the default group for that user. The group option overrides the user's default group, with MonAMI adopting the group-ID specified.

In the following example, the [monami] stanza

tells MonAMI to drop root privileges and assume the

identity of user monami and group monitors.

[monami] user = monami group = monitors

Often, a server may have multiple services running concurrently. Maintaining a monolithic configuration file containing the different monitoring requirements may be difficult as services are added or removed.

To get around this problem, MonAMI will load all the

configuration files that end .conf

within a named directory (/home/paul/MonAMI-test-install/etc/monami.d). If a

new service has been installed, additional monitoring can be

indicated by copying a suitable file into the /home/paul/MonAMI-test-install/etc/monami.d

directory. When the service has been removed the

corresponding file in /home/paul/MonAMI-test-install/etc/monami.d can be

deleted.

Auxiliary configuration directories are specified with the

config_dir option. This option can occur

multiple times in a [monami] stanza. For

example:

[monami] config_dir = /etc/monami.d

Summary of possible attributes within the

[monami] stanza:

- log, string, optional

change the default destination for all message severity levels. This overwrites the built-in default behaviour, but is overwritten by any severity-specific options.

- log_critical, string, optional

change the destination for critical messages. This overwrites any other destination option for critical messages.

- log_error, string, optional

change the destination for error messages. This overwrites any other destination option for error messages.

- log_info, string, optional

change the destination for info messages. This overwrites any other destination option for info messages.

- log_debug, string, optional

change the destination for debugging messages. This overwrites any other destination option for debug messages.

- user, string, optional

The user-name or user-id of the account MonAMI should use. By default, MonAMI will also adopt the corresponding group ID.

- group, string, optional

The group-name or group-id of the group MonAMI should use. This will override the group ID corresponding to the user option.

- config_dir, string, optional

A directory that contains additional configuration files. Each file ending

.confis read and processed, but anymonamistanzas are ignored. Its recommended that this directory be only readable by the user account that MonAMI will run under.

There are some features that are common to each of the plugins. Rather than repeat the same information under each plugin's description, the information is presented here.

Each distinct service has a separate stanza within the

configuration file, using the plugin name. Considering the

apache monitoring plugin (which monitors an Apache

HTTP webserver) as an example, one can monitor multiple

Apache webservers with several separate

[apache] stanzas: one for each monitoring

target. To illustrate this, the following configuration

describes how to monitor an intranet web server and an

external web server.

[apache] name = external-webserver host = www.example.org [apache] name = internal-webserver host = www.intranet.example.org

Each target must have a unique name. It is possible to specify the name a target will adopt with the name attribute (as in the above example). If no name attribute is given, the target take the name of the plugin by default. However, since all names must be unique, only one target can adopt the default name: all subsequent targets (from this plugin) must have their name specified explicitly using the name attribute.

Although specifying a name is optional, it is often useful to set a name explicitly (preferably to something meaningful). Simple configuration files will work fine without explicitly specifying target names, whilst configuration files describing more complex monitoring requirements will likely fail unless they have explicitly named targets.

If there is an ambiguity (due to different targets having the same name) MonAMI will attempt to monitor as much as possible (to “degrade gracefully”) but some loss of functionality is inevitable.

Acquiring the current status of a service will inevitably take resources (such as CPU time and perhaps disk space) away from the service. For some services this effort is minimal, for others it is more substantial. Whatever the burden, there will be some monitoring frequency above which monitoring will impact strongly on service provision.

To prevent overloading a service, the results from querying a service are stored within MonAMI for a period. If there is a subsequent request for the current state of the target within that period then the stored results are used rather than directly querying the underlying service: the results are cached.

The cache retention period is adjustable for each target and can be set with the cache attribute. The cache attribute value is the time for which data is retained, or (equivalently) the guaranteed minimum time between successive queries to the underlying service.

The value is specified using the standard time-interval

notation: one or more numbers each followed by a single

letter modifier. The modifiers are s,

m, h and

d for seconds, minutes, hours and days

respectively. If a qualifier is omitted, seconds is

assumed. The total cache retention period is the sum of the

time. For example 5m 10s is five minutes

and ten seconds and is equivalent to specifying

310.

In the following example configuration file, the MySQL queries are cached for a minute whilst the Apache queries are cached for 2 seconds:

[apache] host = www.example.org cache = 2 [mysql] host = mysql-serv.example.org user = monami password = monami-secret cache = 1m

If no cache retention period is specified, a default value of one second is used. Since MonAMI operates at the granularity of one second, there is apparently no effect on individual monitoring activity, yet we ensure that targets are queried no more often than once a second.

For many services, a one second cache retention time is too short and the cached data should be retained for longer; yet if the cache retention time is set for too long, transitory behaviour will not be detectable. A balance must be struck, which (most likely) will need some experimentation.

The map attribute describes how additional information is to be added to an incoming datatree. When a datatree is sent to a target that has one or more map attributes it is first processed to alter the incoming datatree. To the target, the additional metrics provided by map attributes are indistinguishable from those of the original datatree.

The map attribute values take the following form:

map =target metric:source

The value of target

metric.) within target

metric

The source

String-literals are a string metric

that never change: they have a fixed value independent of

any monitoring activity. A string-literal starts and ends

with a double-quote symbol (") and can

have any content in between. Since MonAMI aims at providing

monitoring information, the use of string literals is

discouraged.

A special is something that provides

some very basic information about the computer: sufficiently

basic that providing the information via a plugin is

unnecessary. A special is represented by its name contained

in angle-brackets (< and

>). The following specials are

available:

FQDNthe Fully Qualified Domain Name of the machine. This is the full DNS name of the computer; for example,

www.example.org.

The follow simple, stand-alone MonAMI configuration illustrates map attributes.

[null]

[sample]

read = null

write = snapshot

interval = 1

[snapshot]

filename = /tmp/monami-snapshot

map = tests.string-literal.first : "this is a string-literal"

map = tests.special.fqdn : <FQDN>

map = tests.string-literal.second : "this is also a \

string-literal"

The null plugin (see Section 3.4.9, “null”)

produces datatrees with no data. Without the

map attributes, the snapshot would

produce an empty file at

/tmp/monami-snapshot. The

map attributes add additional metrics

to otherwise-empty datatrees. This is reflected in the

contents of /tmp/monami-snapshot.

The process of gathering monitoring data from a service is not instantaneous. In general, there will be a delay between MonAMI requesting the data and it receiving that data. The length of this delay may depend on several factors, but is likely to depend strongly on the software being monitored and how busy is the server.

Whenever MonAMI receives data, it makes a note of how long this data-gathering took. MonAMI uses this information to maintain an estimate for the time needed for the next request for data from this monitoring target.

This estimate is available to all plugins, but currently

only two use it: ganglia and

sample. The ganglia

plugin passes this information on to Ganglia as the

dmax value (see Section 3.5.3, “dmax”)

and the sample plugin uses this information to

achieve adaptive monitoring (see Section 3.6.4, “Adaptive monitoring”).

When maintaining an estimate of the next data-gathering delay, MonAMI takes a somewhat pessimistic view. It assumes that data-gathering will take as long as the longest observed delay, unless there is strong evidence that the situation has improved. If gathering data took longer than the current estimate, the estimate is increased correspondingly. If a service becomes sufficiently loaded (e.g., due to increase user activity) so that the observed data-gathering delay increases, MonAMI will adjust its estimate to match.

If data-gathering takes less time than the current estimated value, the current estimate is not automatically decreased. Instead, MonAMI waits to see if the lower value is reliable, and that the delay has stabilised at the lower value. Once it is reasonably sure of this, MonAMI will reduce its estimate for future data-gathering delays.

To determine when the delay has stabilised, MonAMI keeps a history of previous data-gathering delay values. The history is stored as several discrete intervals, each with the same minimum duration. By default, there are ten history intervals each with a one minute minimum duration, giving MonAMI a view of recent history going back at least ten minutes.

Each interval has only one associated value: the maximum observed delay during that interval. At all times, there is an interval called the current interval. Only the current interval is updated, the other intervals provide historical context. As data is gathered the maximum observed delay for the current interval is updated.

When the current interval has existed for more than the minimum duration (one minute, by default), all the intervals moved: the current history interval becomes the first non-current history interval, what was the first non-current interval becomes the second, and so on. The information in the last history interval is dropped and a new current interval is created. Future data-gathering delays are recorded in this new current interval until the minimum interval has elapsed and the intervals moved again.

MonAMI takes two statistical measures of the history intervals: the maximum value and the average absolute deviation (or average deviation for short). The maximum value is the proposed new value for the estimated delay, if it is lower, and the absolute deviation is used to determine if the change is significant.

Broadly speaking, the average deviation describes how settled the data stored in the historic intervals are over the recent history: a low number implies data-taking delays are more predictable, a high number indicates they are less predicable. MonAMI only reduces the estimate for future delays if the difference (between current estimate value and the maximum over all historic intervals) is significant. It is significant if the ratio between the proposed drop in delay and the average deviation exceeds a certain threshold value.

In summary, to reduce the estimate of future delays, the observed delay must be persistently low over the recorded history (minimum of 10 minutes, by default). If the delay is temporarily low, is decreasing over time or fluctuates, the estimate is not reduced.

There are two attributes that affect how MonAMI determines

its estimate. The default values should be sufficient under

most circumstances. Moreover, there are separate attributes

for adjusting the behaviour both of adaptive monitoring (see

Section 3.6.5, “Sample attributes”), and the

dmax value of Ganglia (see Section 3.5.3, “Attributes”). Adjusting these attributes

may be more appropriate.

Attributes

- md_intervals integer, optional

the number of historic intervals to consider. The default is 10 and the value must be between 2 and 30. Increased number of intervals results in more stringent requirement needed before the estimate is reduced. It also increases the accuracy of the average deviation measurements.

Having a small number of intervals (less then 5, say) is not recommended as the statistics becomes less reliable.

A large number of intervals gives more reliable statistical results, but the system will take longer to react (to reduce the delay estimate) to changing situations. Perhaps this is most noticeable if there is a single data-gathering delay that is unusually long. If this happens, MonAMI will take at least the md_intervals times the minimum delay to reduce the delay estimate.

- md_duration integer, optional

The minimum duration, in seconds, for an interval. The default is 60 seconds and the value must be between 1 second and 1200 seconds (20 minutes).

Each interval must have at least one data point: an observation of the data-gathering delay. To ensure this, the value of md_duration is implemented as a minimum duration and, in practise, the intervals can be longer. For example, with the default configuration (md_duration of one minute, md_intervals of 10) if only a single monitoring flow is established that gathers data from a monitoring target every 90 seconds, each interval will have a 90 second duration and complete history will be 15 minute.

This section describes the different services that can be monitored (for example, a MySQL database or an Apache webserver). It gives brief introductions to which services the plugins can monitor and how they can be configured. Wherever possible, sensible defaults are available so often little or no configuration is required for common deployment scenarios.

The available monitoring plugins depend on which plugins have been built and installed. If you have received this document as part of a binary distribution, it is possible that the distribution does not include all the plugins described here. It might also contain other plugins provided independently from the main MonAMI release.

AMGA (ARDA Metadata Catalogue Project) is a metadata server provided by the ARDA/EGEE project as part of their gLite software releases. It provides additional metadata functionality by wrapping an underlying database storage. More information about AMGA is available from the AMGA project page.

The amga monitoring plugin will monitor the server's database connection usage and the number of incoming connections. For both, the current value and configured maximum permitted are monitored.

Attributes

- host string, optional

the host on which the AMGA server is running. The default value is

localhost.- port integer, optional

the port on which the AMGA server listens. The default value is 8822.

The Apache HTTP (or web) server is perhaps the most well known project from the Apache Software Foundation. Since April 1996, the Netcraft web survey has shown it to be the most popular on the Internet. More details can be found at the Apache home page.

The apache plugin monitors the current status of an Apache HTTP server. It can also provide event-based monitoring, based on various log files.

The Apache server monitoring is achieved by downloading the

server-status page (provided by the mod_status Apache plugin) and

parsing the output. Usually, this option is available within the

Apache configuration, but commented-out by default (depending on

the distribution). The location of the Apache configuration is

Apache-version and OS specific, but is usually found in either the

/etc/apache, /etc/apache2 or /etc/httpd directory. To enable the

server-status page, uncomment the section or add lines within the

apache configuration that look like:

<Location /server-status>

SetHandler server-status

Order deny,allow

Deny from all

Allow from .example.com

</Location>

Here .example.com is an illustration of how to limit

access to this page. You should change this to either your DNS

domain or explicitly to the machine on which you are to run

MonAMI.

There is an ExtendedStatus option that configures Apache to include some additional information. This is controlled within the Apache configuration by lines similar to:

<IfModule mod_status.c> ExtendedStatus On </IfModule>

Switching on the extended status should not greatly affect the server's load and provides some additional information. MonAMI can understand this extra information, so it is recommended to switch on this ExtendedStatus option.

Event-based monitoring

Event-based monitoring is made available by watching log files. Any time the Apache server writes to a watched log file, an event is generated. The plugin supports multiple event channels, allowing support for multi-homed servers that log events to different log files.

Event channels are specified by log attributes. This can be repeated to configure multiple event channels. Each log attribute has a corresponding value like:

name:path[type]

where:

nameis an arbitrary name given to this channel. It cannot have a colon (

:) and should not have a dot (.) but most names are valid.pathis the path to the file. Log rotations (where a log file is archived and a new one created) are supported.

typeis either

combined, orerror.

The following example configures the access

channel to read the log file

/var/log/apache2/access.log, which is in the

Apache standard “combined” format.

[apache] log = access: /var/log/apache2/access.log [combined]

Attributes

- host string, optional

the hostname for webserver to monitor. The default value is

localhost.- port integer, optional

the port on which the webserver listens. The default value is 80

- log string, zero or more

specifies an event monitoring channel. Each log attribute has a value like:

name:path[type]

dCache (see dCache home page) is a system jointly developed by Deutsches Elektronen-Synchrotron (DESY) and Fermilab that aims to provide a mechanism for storing and retrieving huge amounts of data among a large number of heterogeneous server nodes, which can be of varying architectures (x86, ia32, ia64). It provides a single namespace view of all of the files that it manages and allows access to these files using a variety of protocols, including SRM, GridFTP, dCap and xroot. By connecting dCache to a tape storage backend, it becomes a hierarchical storage manager (HSM).

Authentication

The dCache monitoring plugin works by connecting to the underlying PostGreSQL database that dCache uses to store the current system state. To achieve this, MonAMI must have the credentials (a username and password) to log into the database and perform read queries.

If you do not already have a read-only account, you will need to create such an account. It is strongly recommended not to use an account with any write privileges as the password will be stored plain-text within the MonAMI configuration file (see Section 4.2.2, “Passwords being stored insecurely”).

To configure PostGreSQL, SQL commands need to be sent to the database server. To achieve this, you will need to use the psql command, connecting to the dcache database. On many systems you must log in as the database user “postgres”, which often has no password when connecting from the same machine on which database server is running. A suitable command is:

psql -U postgres -d dcache

The following SQL commands will create an account

monamimonami-secret

Important

Please ensure you change the example password

(monami-secret

CREATE USERmonami; ALTER USERmonamiPASSWORD 'monami-secret'; GRANT SELECT ON TABLE copyfilerequests_b TOmonami; GRANT SELECT ON TABLE getfilerequests_b TOmonami; GRANT SELECT ON TABLE putfilerequests_b TOmonami;

If you intend to monitor the database remotely, you may need to

add an extra entry in PostGreSQL's remote access file:

pg_hba.conf. With some distribution, this

file is located in the directory

/var/lib/pgsql/data.

Currently, the information gathered is limited to the rate of SRM

GET, PUT and COPY requests received. This information is gathered

from the copyfilerequests_b,

getfilerequests_b and putfilerequests_b tables. Future

versions of MonAMI may read other tables, so requiring additional

GRANT statements.

Attributes

- host string, optional

the host on which the PostGreSQL database is running. The default is

localhost.- ipaddr string, optional

the IP address of the host on which the database is running. This is useful when the host is on multiple IP subnets and a specific one must be used. The default is to look up the IP address from the host.

- port integer, optional

the

TCPport to use when connecting to the database. The default is port 5432 (the standard PostGreSQL port).- user string, optional

the username to use when connecting to the database. The default is the username of the system account MonAMI is running under. When running as a daemon from a standard RPM-based installation, the default user will be

monami.- password string, optional

the password to use when authenticating. The default is to attempt password-less login to the database.

Disk Pool Manager (DPM) is a service that implements the SRM protocol (mainly for remote access) and rfio protocol (for site-local access). It is an easy-to-deploy solution that can support multiple disk servers but has no support for tape/mass-storage systems. More information on DPM can be found at the DPM home page.

The dpm plugin connects to the MySQL server DPM uses. By querying this database, information is extracted such as the status of the filesystems and the used and available space. The space statistics are available as a summary, and broken down for each group, and for each filesystem. The daemon activity on the head node can also be monitored.

Authentication

This plugin requires read-only privileges for the database DPM

uses. The following set of SQL statements creates login

credentials with username of

monamiusermonamipass

GRANT SELECT ON cns_db.* TO 'monamiuser'@'localhost' IDENTIFIED BY 'monamipass'; GRANT SELECT ON dpm_db.* TO 'monamiuser'@'localhost' IDENTIFIED BY 'monamipass';

If MonAMI is to monitor the MySQL database remotely, the following SQL can be used to create login credentials

GRANT SELECT ON cns_db.* TO 'monamiuser'@'%' IDENTIFIED BY 'monamipass'; GRANT SELECT ON dpm_db.* TO 'monamiuser'@'%' IDENTIFIED BY 'monamipass';

If local and remote access to the MonAMI database is needed all four above SQL commands should be combined.

Attributes

- host string, optional

the host on which the MySQL server is running. Default is

localhost.- user string, required

the username with which to log into the server.

- password string, required

the password with which to log into the server.

The filesystem plugin monitors generic (i.e., non-filesystem specific) features of a mounted filesystem. It reports both capacity and “file” statistics. The “file” statistics correspond to inode usage for filesystems that use inodes (such as ext2).

Note

With both reported resources (blocks and files), there are similar-sounding metrics: “free” and “available”. “free” refers to total resource potentially available and “available” refers to the resource available to general (non-root) users.

The difference between the two comes about because it is common to reserve some capacity for the root user. This allows core system services to continue when a partition is full: normal users cannot create files but root (and processes running as root) can.

Attributes

- location string, required

the absolute path to any file on the filesystem.

The Globus Alliance distribute a modified version of the WU-FTP client that has been patched to allow GSI-based authentication and multiple streams. This is often referred to as “GridFTP”.

Various grid components use GridFTP as an underlying transfer mechanism. Often, these have the same log-file format for recording transfers, so parsing this log-file is a common requirement.

The gridftp plugin monitors GridFTP log files, providing an event for each transfer. This is under the transfers channel.

Attributes

- filename string, required

the absolute path to the GridFTP log file.

On their website, Cluster Resources describe Maui as “an advanced batch scheduler with a large feature set well suited for high performance computing (HPC) platforms”. Within a cluster it is used to decide which job (of many that are available) should be run next. Maui provides sophisticated scheduling features such as advanced fair-share definitions and “allocation bank”. More details are available within the Maui homepage.

Access control

The MonAMI maui plugin will need sufficient access rights

to query the Maui server. If MonAMI is running on the same

machine as the Maui server, (most likely) no additional host will

be needed. If MonAMI is running on a remote machine, then

access-right must be granted for that machine. Append the remote

host's hostname to the space-separated ADMINHOST

list.

The plugin will also need to use a valid username. By default it

will use the name of the user it is running as (monami),

but the plugin can use an alternative username (see the

user attribute). To add an additional

username, append the username to the space-separated

ADMIN3 list.

The following example configuration shows how to configure Maui to

allow monitoring from host

monami.example.orgmonami

SERVERHOST maui-server.example.org ADMIN1 root ADMIN3monamiADMINHOST maui-server.example.orgmonami.example.orgRMCFG[base] TYPE=PBS SERVERPORT 40559 SERVERMODE NORMAL

Password

The Maui authenticates by the client and server keeping a shared secret: a password. Currently this password must be integer number. Unfortunately, the password is decided as part of the Maui build process. If one is not explicitly specified, a random number is selected as the password. The password is then embedded within the Maui client programs and used when they communicate with the Maui server. Currently, it is not possible to configure the Maui server to use an alternative password without rebuilding the Maui client and servers.

To communicate with the Maui server the maui plugin must know the password. Unfortunately, as the password is only stored within the executables, it is difficult to discover. The maui plugin has heuristics that allow it to scan a Maui client program and, in most cases, discover the password. This requires a Maui client program to be present on whichever computer MonAMI is running. If the Maui client is in a non-standard location, its absolute path can be specified with the exec attribute.

If the password is known (for example, its value was specified when compiling Maui) then it can be specified using the password attribute. Specifying the password attribute will stop MonAMI from scanning Maui client programs.

Once the password is known, it can be stored in the MonAMI configuration using the password attribute. This removes the need for a Maui client program. However, should the Maui binaries change (for example, upgrading an installed Maui package), it is likely that the password will also change. This would stop the MonAMI plugin from working until the new password was supplied.

The recommended deployment strategy is to install MonAMI on the Maui server and allow the maui plugin to scan the Maui client programs for the required password.

Time synchronisation

When communicating between the maui and Maui server, both parties want to know that the messages are really from the other party. The shared-secret is one part of this process, another is to check the time within the message. This is to prevent a malicious third-party from sending messages that have already been sent: a “replay attack”.

To prevent these replay attacks, the clocks on the Maui server and the server MonAMI is running must agree. If both machines are well configured, their clocks will agree with ~10 millisecond difference. Since the network may introduce a slight delay, some tolerance is needed.

The maui plugin requires an agreement of one second by default. This should be easy to satisfied with modern networks. If, for whatever reason, this is not possible the tolerance can be make more lax by specifying the max_time_delta attribute.

Note

Should there be a systematic error between the clocks on two servers, effort should be made in synchronosing those clocks. Increasing the max_time_delta makes MonAMI more vulnerable to replay attacks.

Attributes

- host string, optional

the hostname of the Maui server. If not specified,

localhostwill be used.- port integer, optional

the

TCPport to which the plugin with connect. If not specified, the default value is 40559.- user string, optional

the user name to present to the Maui server when communicating. The default value is the name of the account under which MonAMI is running.

- max_time_delta integer, optional

the maximum allowed time difference, in seconds, between the server and client. The default value is one second.

- password integer, optional

the shared-secret between this plugin and the Maui server. The default policy is to attempt to discover the password automatically. Specifying the password will prevent attempts at discovering it automatically.

- timeout string, optional

the time MonAMI should wait for a reply. The string is in time-interval format (e.g., “

5m 10s” is five minutes and ten seconds; “310” would be equivalent). The default behaviour is to wait indefinitely.- exec string, optional

the absolute path to the mclient (or similar) Maui client program. If the plugin was unsuccessful scanning the program given by

execit will also try standard locations.

This plugin monitors the performance of a MySQL database. MySQL is a commonly used Free (GPLed) database. The parent company (MySQL AB) describe it as “the world's most popular open source database”. For more information, please see the MySQL home page

The statistics monitored are taken from the status variables.

They are acquired by executing the MySQL SQL SHOW

STATUS;. The raw variables are described in the MySQL

manual, section 5.2.5: Status

Variables.

Note

The metrics names provided by MySQL are in a flat namespace. These names are not used by MonAMI; instead, the metrics are mapped into a tree structure, allowing more easy navigation of, and section from, the available metrics.

Privileges

To function, this plugin requires an account to access the

database. Please note: this database account requires no database

access privileges, only that the username and password will allow

MonAMI to connect to the MySQL database. For security

considerations, you should not employ login

credentials used elsewhere (and never root or similar

power-user). The following is a suitable SQL statement for

creating a username and password of

monamimonamipass

CREATE USER 'monami'@'localhost' IDENTIFIED BY "monamipass";

Sharing login credentials is not recommended. If you decide to

share credentials make sure the MonAMI configuration file is

readable only by the monami user (see Section 3.2.2, “Dropping root

privileges”).

Note

In addition to monitoring a MySQL database, the mysql plugin can also store information MonAMI has gathered within MySQL. This is described in Section 3.5.8, “MySQL”.

Attributes

- user string, required

the username with which to log into the server.

- password string, required

the password with which to log into the server

- host string, optional

the host on which the MySQL server is running. If no host is specified, the default

localhostis used.

The null plugin is perhaps the simplest to understand. As a monitoring plugin, it providing an empty datatree when requested for data. The main use for null as a monitoring target is to demonstrating aspects of MonAMI without the distraction of real-life effects from other monitoring plugins.

The null plugin will supply an empty datatree. In conjunction with a reporting plugin (e.g., the snapshot), this can be used to demonstrate the map attribute for adding static content. This attribute is described in Section 3.3.3, “The map attribute”.

Delays

Another use for a null target is to investigate the effect of a service taking a variable length of time to respond with monitoring data. This is emulated by specifying a delay file. If the delayfile attribute is set, then the corresponding file is read. It should contain a single integer number. This number dictates how long (in seconds) a null target should wait when requested for data. The file can be changed at any time and the change will affect the next time the null target is read from. This is particularly useful for demonstrating how MonAMI estimates future delays (see Section 3.3.4, “Estimating future data-gathering delays”) and undertakes adaptive monitoring (see Section 3.6.4, “Adaptive monitoring”).

The following example will demonstrate this usage:

[null] delayfile=/tmp/monami-delay [sample] read = null write = null interval = 1s

Then, by changing the number stored in

/tmp/monami-delay, the delay can be adjusted

dynamically. To set the delay to three seconds, do:

$ echo 3 > /tmp/monami-delay

To remove the delay, simply set the delay to zero:

$ echo 0 > /tmp/monami-delay

Attributes

- delayfile string, optional

the filename of the delay file, the contents of which is parsed as an integer number. This number is the number of seconds the null target will delay when replying with an empty datatree.

Network UPS Tools (NUT) provides a standard method through which an Uninterruptable Power Supply (UPS) can be monitored. Part of this framework allows for signalling, so that machines can undergo a controlled shutdown in the event of a power failure. Further details of NUT are available from the NUT home page.

The MonAMI nut plugin connects to the NUT data

aggregator daemon (upsd) and queries the status of all known,

attached UPS devices. The ups.conf file must

be configured for available hardware and the startup scripts must

be configured to start the required UPS-specific monitoring

daemons.

By default, localhost will be allowed access to the upsd

daemon but access for external hosts must be added explicitly in

the upsd.conf file. See the NUT

documentation on how best to achieve this.

Attributes

- host string, optional

the host on which the NUT

upsddaemon is running. The default value islocalhost.- port integer, optional

the port on which the NUT

upsddaemon listens. The default value is 3493.

The process plugin monitors Unix processes. It can count the number of processes that match search criteria and can give detailed information on a specific process.

The information process gives should not be confused with any process, memory or thread statistics other monitoring plugins provide. Some services report their current thread, process or memory usage, which may duplicate some of the information this plugin reports (see, for example, Section 3.4.2, “Apache” and Section 3.4.8, “MySQL”). However, process reports information from the kernel and should work with any application.

The process plugin has two main types of monitors: counting processes and detailed information about a single process. A single process target can be configured to do any number of either type of monitoring and the results are combined in the resulting datatree.

Counting processes

To count the number of processes, a count

attribute must be specified. In its simplest form, the

count attribute value is simply the name of

the process to count. The following example reports the number of

imapd processes that are currently in existance.

[process] count = imapd

The format of the count attribute allows

for more sophisticated queries of form:

reported name :

proc name

[cond1,

cond2, ...]

All of the parts are optional: the part upto and including the

colon

(reported name :proc name[) can be omitted, but

at least one of the first two parts must be specified. The

examples below may help clarify this!

cond1,

cond2, ...]

To be included in the count, a process' name must match the

proc namereported nameproc name

The part in square brackets, if present, specifies some additional

constraints. The comma-separated list of key, value pairs define

additional predicates; for example, [uid=root,

state=R] means only processes that are running as

root and are in state running will be counted. The valid

conditions are:

uid =uidto be considered, the process must be running with a user ID of

uidgid =gidthe process must be running with a group ID of

gidstate =statelistthe process must have one of the states listed in

statelistRprocess is running (or ready to be run),

Ssleeping, awaiting some external event,

Din uninterruptable sleep (typically waiting for disk IO to complete),

Tstopped (due to being traced),

Wpaging,

Xdead,

Zdefunct (or "zombie" state).

The following example illustrates count used to count the number of processes. The different attributes show how the different criteria are represented.

[process] count = imapd ❶ count = io_imapd : imapd [state=D] ❷ count = all_java : java ❸ count = tomcat_java : java [uid=tomcat5] ❹ count = zombies : [state=Z] ❺ count = tcat_z : java [uid=tomcat4, state=Z] ❻ count = run_as_root : [uid=0] ❼

Count the number of | |

Count the number of | |

Count the number of java processes that

are running. Store the number as a metric called

| |

Count the number of java processes that

are running as user | |

Count the total number of zombie processes. Store the

number as a metric called | |

Count the number of zombie tomcat

processes. Store the number as a metric called

| |

Count the number of processes running as |

Detailed information

The watch attribute specifies a process to monitor in detail. The process to watch is identified using the same format as with count statements; however, the expectation is that only a single process will match the criteria.

If there is more than one process matching the search criteria then one is chosen and that process is reported. In principle, the selected process might change from one time to the next, which would lead to confusing results. In practise, the process with the lowest pid is chosen, so is both likely to be the oldest process and unlikely to change over time. However, this behaviour is not guaranteed.

Much information is gathered with a watch

attribute. This information is documented in the

stat and status sections

of the proc(5) manual page. Some of the

more useful entries are copied below:

- pid

the process ID the the process being monitored.

- ppid

the process ID of the parent process.

- state

a single character, with the same semantics as the different process states listed above.

- minflt

number of minor memory page faults (no disk swap activity was required).

- majflt

number of major memory page faults (those requiring disk swap activity).

- utime

number of jiffies[1] of time spent with this process scheduled in user-mode.

- stime

number of jiffies[1] of time spent with this process scheduled in kernel-mode.

- threads

number of threads in use by this process.

Note

An accurate value is provided by the 2.6-series kernels. Under 2.4-series kernel with LinuxThreads, heuristics are used to derive a value. This value should be correct under most circumstances, but it may be confused if multiple instances of the same multi-threaded process is running concurrently.

- vsize

virtual memory size: total memory used by the process.

- rss

Resident Set Size: number of pages of physical memory a process is using (less 3 for administrative bookkeeping).

Attributes

- count string, optional

either the name of the process(es) to count, or the conditions processes must satisfy to be included in the count. This attribute may be repeated for multiple process counting.

count attributes have the form:

reported name:proc name[cond1,cond2, ...]- watch string, optional

either the name of the process to obtain detailed information, or the conditions a process must satisfy to be watched. This attribute may be repeated to obtain detailed information about multiple processes.

watch attributes have the form:

reported name:proc name[cond1,cond2, ...]

The stocks plugin uses one of the web-services provided by XMethods to obtain a near real-time quote (delayed by 20 minutes) for one or more stocks on the United States Stock market. Further details of this service are available from the Stocks service summary page.

In addition to providing financial information, stocks is

a pedagogical example that demonstrates the use of SOAP within

MonAMI.

Caution

The authors of MonAMI expressly disclaim the accuracy, adequacy, or completeness of any data and shall not be liable for any errors, omissions or other defects in, delays or interruptions in such data, or for any actions taken in reliance thereon.

Please do not send too many requests. A request every couple of minutes should be sufficient.

Attributes

- symbols string, required

a comma- (or space-) separated list of ticker symbols to monitor. For example,

GOOGis the symbol for Google Inc. andRHTis the symbol for RedHat Inc.

The tcp monitoring plugin provides information about the

number of TCP sockets in a particular state. Here, a socket is

either a TCP connection to some machine or the ability to

receive a particular connection (i.e., that the local machine is

“listening” for incoming connections).

A tcp monitoring target takes an arbitrary number of

count attributes. The value of a

count attributes describes how to report

the number of matching sockets and the criteria for including a

socket within that count. These attributes take values like:

name

[cond1,

cond2, ...]nameTCP sockets. The conditions

(cond1cond2state=ESTABLISHED). A socket must match all

conditions to be included in the count.

The condition keywords may be any of the following:

local_addrThe local

IPaddress to which the socket is bound. This may be useful on multi-homed machines for sockets bound to a single interface.remote_addrThe remote

IPaddress of the socket, if connected.local_portThe port on the local machine. This can be the numerical value or a common name for the port, as defined in

/etc/service.remote_portThe port on the remote machine, if connected. This can be the numerical value or a common name for the port.

portA socket's local or remote port must match. This can be the numerical value or a common name for the port.

stateThe current state of the socket. Each local socket will be in one of a number of states and changes state during the lifetime of a connection. All the states listed below are valid and may occur naturally on a working system; however, under normal circumstances some states are transitory: one would not expect a socket to stay in a transitory state for long. A large and/or increasing number of sockets in one of these transitory states might indicate a networking problem somewhere.

The valid states are listed below. For each state, a brief description is given and the possible subsequent states are listed.

- LISTEN

A program has indicated it will receive connections from remote sites.

Next: SYN_RECV, SYN_SENT

- SYN_SENT

Either a program on the local machine is the client and is attempting to connect to remote machine, or the local machine sends data from a LISTENing socket (less likely).

Next: ESTABLISHED, SYN_RECV or CLOSED

- SYN_RECV

Either a LISTENing socket has received an incoming request to establish a connection, or both the local and remote machines are attempting to connect at the same time (less likely)

Next: ESTABLISHED, FIN_WAIT_1 or CLOSED

- ESTABLISHED

Data can be sent to/from local and remote site.

Next: FIN_WAIT_1 or CLOSE_WAIT

- FIN_WAIT_1

Start of an active close. The application on local machine has closed the connection. Indication of this has been sent to the remote machine.

Next: FIN_WAIT_2, CLOSING or TIME_WAIT

- FIN_WAIT_2

Remote machine has acknowledged that local application has closed the connection.

Next: TIME_WAIT

- CLOSING

Both local and remote applications have closed their connections “simultaneously”, but remote machine has not yet acknowledged that the local application has closed the local connection.

Next: TIME_WAIT

- TIME_WAIT

Local connection is closed and we know the remote site knows this. We know the remote site's connection is closed, but we don't know if the remote site know that we know this. (It is possible that the last ACK packet was lost and, after a timeout, the remote site will retransmit the final FIN packet.)

To prevent the potential packet loss (of the local machine's final ACK) from accidentally closing a fresh connection, the socket will stay in this state for twice MSL timeout (depending on implementation, a minute or so).

Next: CLOSED

- CLOSE_WAIT

The start of a passive close. The application on the remote machine has closed its end of the connection. The local application has not yet closed this end of the connection.

Next: LASK_ACK

- LASK_ACK

Local application has closed its end of the connection. This has been sent to the remote machine but the remote machine has not yet acknowledged this.

Next: CLOSED

- CLOSED

The socket is not in use.

Next: LISTEN or SYN_SENT

- CONNECTING

A pseudo state. The transitory states when starting a connection match, specifically either SYN_SENT or SYN_RECV.

- DISCONNECTING

A pseudo state. The transitory states when shutting down a connection match, specifically any of FIN_WAIT_1, FIN_WAIT_2, CLOSING, TIME_WAIT, CLOSE_WAIT or LASK_ACK match.

The states ESTABLISHED and LISTEN are long-lived states. It is natural to find sockets that are in these states for extended periods.

For applications that use “half-closed” connections, the FIN_WAIT_2 and TIME_WAIT states are less transitory. As the name suggests, half-closed connections allows data to flow in one direction only. It is achieved by the application that no longer wishes to send data closing their connection (see FIN_WAIT_1 above), whilst the application wishing to continue sending data does nothing (and so suffers a passive close). Once the half-closed connection is established, the active close socket (which can no longer send data) will be in FIN_WAIT_2, whilst the passive close socket (which can still send data) will be in CLOSE_WAIT.

There are two pseudo states for the normal transitory states: CONNECTING and DISCONNECTING. They are intended to help catch networking or software problems.

The following example checks whether an application is listening on three well-known port numbers. This might be used as a check whether services are running as expected.

[tcp] name = listening count = ssh [local_port=ssh, state=LISTEN] count = ftp [port=ftp, state=LISTEN] count = mysql [local_port=mysql, state=LISTEN]

The following example records the number of connections to a

webserver. The established metric records the

connections where data may flow in either direction. The other

two metrics record connections in the two pseudo states. Normal

traffic should not stay long in these pseudo states; connections

that persist in these states may be symptomatic of some problem.

[tcp] name = incoming_web_con count = established [local_port=80, state=ESTABLISHED] count = connecting [local_port=80, state=CONNECTING] count = disconnecting [local_port=80, state=DISCONNECTING]

Attributes

- count string, optional

the name to report for this metric followed by square brackets containing a comma-separated list of conditions a socket must satisfy to be included in the count. This option may be repeated for multiple

TCPconnection counts.The conditions are keyword-value pairs, separated by

=, with the following valid keywords:local_addr,remote_addr,local_port,remote_port,port,state.The

statekeyword can have one of the followingTCPstates: LISTEN, SYN_RECV, SYN_SENT, ESTABLISHED, CLOSED, FIN_WAIT_1, FIN_WAIT_2, CLOSE_WAIT, CLOSING, TIME_WAIT, LASK_ACK; or one of the following two pseudo states: CONNECTING, DISCONNECTING.

Apache Tomcat is one of the projects from the Apache Software

Foundation. It is a Java-based application server (or servlet

container) based on Java Servlet and JavaServer Pages

technologies. Servlets and JSP are defined under Sun's Java

Community Process. More information about Tomcat can be found

at the Apache

Tomcat home page.

Also under development of the Java Community Process is the Java

Monitoring eXtensions (JMX). JMX provides a standard method

of instrumenting servlets and JSPs, allowing remote monitoring

and control of Java applications and servlets.

The tomcat plugin uses the JMX-proxy servlet to monitor

(potentially) arbitrary aspects of a Servlet and JSPs. This

provides structured plain-text output from Tomcat's JMX MBean

interface. Applications that require monitoring should connect to

that interface for MonAMI to discover their data.

To monitor a custom servlet, the required instrumentation within

the servlet/JSP must be written. Currently, there is an

additional light-weight conversion needed within MonAMI, adding

some extra information about the monitored data. Sample code

exists that monitors aspects of the Tomcat server itself.

Any tomcat monitoring target will need a username and

password that matches a valid account within the Tomcat server

that has the manager

role. This is normally configured in the file

$CATALINA_HOME/conf/tomcat-users.xml.

Including the following line within this file creates a new user

monami, with password

monami-secretmanager role, to

Tomcat.

<user username="monami" password="monami-secret" roles="manager"/>

This line should be added within the

<tomcat-users> context.

Warning

Be aware that Basic authentication sends the username and password unencrypted over the network. These values are at risk if packets can be captured. If you are not sure, you should run MonAMI on the same server as Tomcat.

In addition to connecting to Tomcat, you also need to specify which classes of information you wish to monitor. The following are available: ThreadPool and Connector. To monitor some aspect, you must specify the object type along with the identifier for that object within the monitoring definition. For example:

[tomcat] name = local-tomcat ThreadPool = http-8080 Connector = 8080

ThreadPool monitors a named thread pool (e.g.,

http-8080), monitoring the following

quantities:

- minSpareThreads

the minimum number of threads the server will maintain.

- currentThreadsBusy

the number of threads that are either actively processing a request or waiting for input.

- currentThreadCount

total number of threads within this ThreadPool.

- maxSpareThreads

if the number of spare threads exceeds this value, the excess are deleted.

- maxThreads

an absolute maximum number of threads.

- threadPriority

the priority at which the threads run.

The Connector monitors a ConnectorMBean and is identified by which port it listens on. It monitors the following quantities:

- allowTrace

Can we trace the output?

- clientAuth

Did the client authenticate?

- compression

Is the connection compressed?

- disableUploadTimeout

Is the upload timeout disabled?

- emptySessionPath

Is there no session?

- enableLookups

Are lookups enabled?

- tcpNoDelay

Is the

TCPSO_NODELAYflag set?- useBodyEncodingForURI

does the

URIcontain body information?- secure

are the connections secure?

- acceptCount

number of pending connections this Connector will accept before rejecting incoming connections.

- bufferSize

size of the input buffer.

- connectionLinger

how long the connection lingers, waiting for other connections.

- connectionTimeout

the timeout for this connection.

- connectionUploadTimeout

the timeout for uploads.

- maxHttpHeaderSize

the maximum size for

HTTPheader.- maxKeepAliveRequests

how many keep-alives before the connection is considered dead.

- maxPostSize

maximum size of the information

POSTed.- maxSpareThreads

c.f. ThreadPool

- maxThreads

c.f. ThreadPool

- minSpareThreads

c.f. ThreadPool

- threadPriority

c.f. ThreadPool

- port

the port on which this connector listens.

- poxyPort